Here is the continuation of our series of articles on camera calibration. Don't forget to read the first part!

A camera models the real world by projecting 3D objects onto a 2D image plane. But what if we really want 3D information? Add more cameras!

Stereo image. Using two cameras, we can obtain a stereo image. The rationale behind this is to imitate how a person uses two eyes to estimate distance to objects. A mundane example of using stereo technology is the “portrait mode” in a modern smartphone. This effect gives the image a 3D feel: the object in focus looks sharp, while the rest of the image is blurred.

Triangulation. Using two cameras that are separated in space and observe the same object, we can determine the distance to the object, as well as its size and speed in real-world units. For triangulation, the cameras can be placed at any distance from each other (the important thing is that the captured object is in their common field of view).
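To make the idea concrete, here is a minimal sketch of one simple triangulation variant, the midpoint method. It assumes calibration has already given us each camera's center and the direction of the viewing ray toward the object, all in one shared world frame. The function name and the sample numbers are hypothetical, and production libraries may use a different formulation.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D point from two viewing rays (midpoint method).

    c1, c2: camera centers; d1, d2: ray directions toward the object.
    All are (x, y, z) tuples expressed in the same world frame.
    """
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))

    # Find s, t that minimize |(c1 + s*d1) - (c2 + t*d2)|.
    w = tuple(a - b for a, b in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; cannot triangulate")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom

    # Closest point on each ray, then the midpoint between them.
    p1 = tuple(ci + s * di for ci, di in zip(c1, d1))
    p2 = tuple(ci + t * di for ci, di in zip(c2, d2))
    return tuple((u + v) / 2.0 for u, v in zip(p1, p2))


# Two cameras 1 m apart, both looking at an object about 4 m away.
point = triangulate_midpoint((0.0, 0.0, 0.0), (0.5, 0.2, 4.0),
                             (1.0, 0.0, 0.0), (-0.5, 0.2, 4.0))
print(point)  # approximately (0.5, 0.2, 4.0)
```

With noisy measurements the two rays rarely intersect exactly, which is why the sketch returns the midpoint of the shortest segment between them rather than an exact intersection.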

A related example, somewhat outside the main topic, is structure from motion (SfM). We can treat frames taken by the same camera from multiple locations much like a set of consecutive stereo-pair images. SfM is the process of estimating the 3D structure of a scene from a set of 2D images. It is used in many applications, such as 3D scanning, augmented reality, and visual simultaneous localization and mapping (vSLAM).

For example, by flying around an object with a drone, we can build a 3D model of it even if the drone has only one camera.

More information from multiple cameras. Multiple cameras are installed when it is important to get more information about the object. Imagine a sorting center where a system of several cameras pointed at the belt helps to determine the volume of parcels, the consistency of stickers, and other parameters.

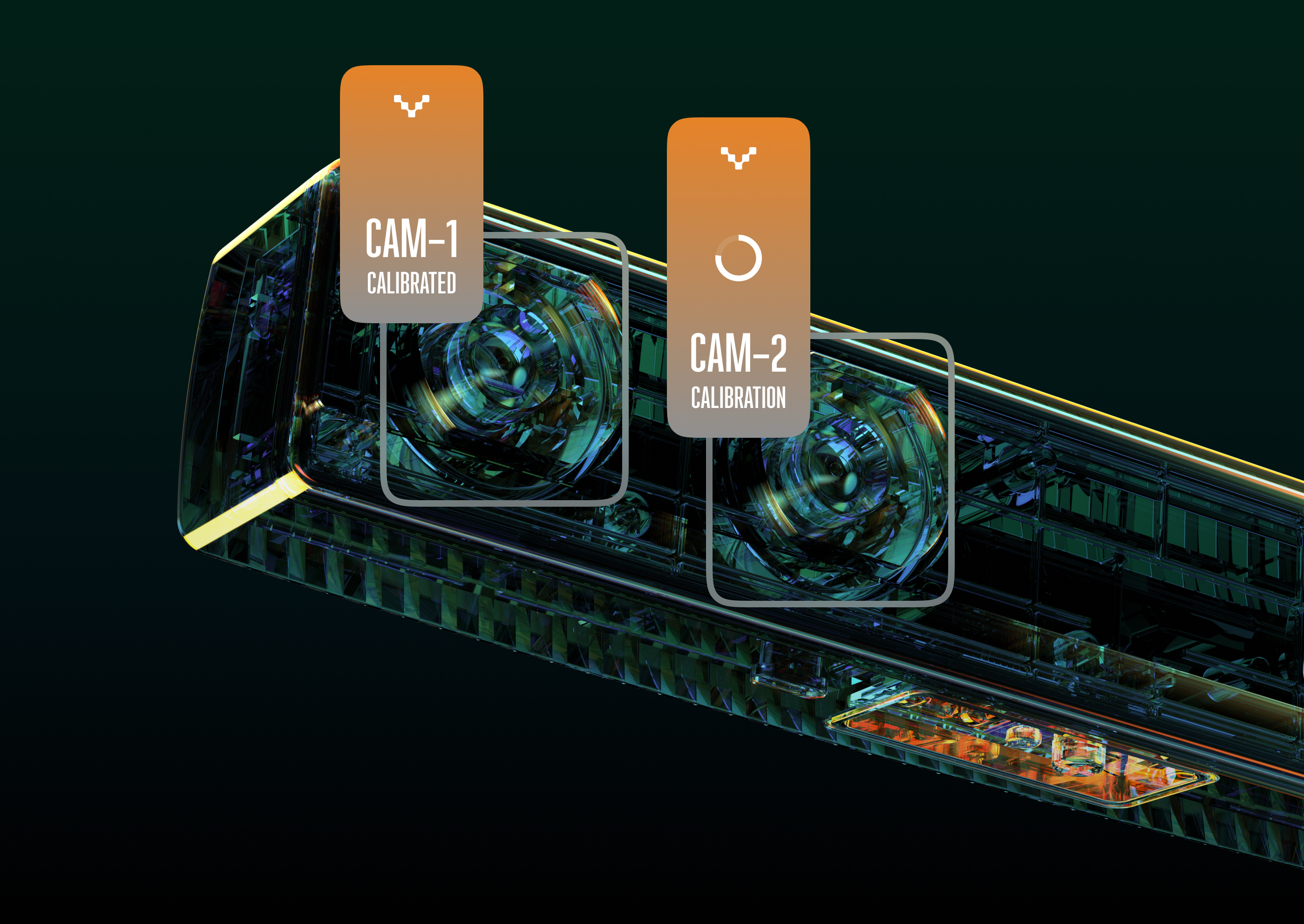

For volume or dimension measurement, two or more cameras will be far less effective if they are not calibrated beforehand: without calibration, the computer vision algorithm will not be able to match the images from the cameras.

CV algorithms that use, for example, a stereo pair rely on the assumption that each object is captured by both cameras at exactly the same time. A camera has a shutter, a device (or program) that “shoots” the image from the sensor. When you take a pair of cameras where each has its own shutter, you’re not guaranteed that the shutters will snap at exactly the same time. They will look more like the eyes of Hypnotoad.

But what if the camera is not mounted indoors, but moves with a car? For example, if the two shutters fire up to 1/30th of a second apart, then at a speed of 100 km/h (about 27.8 m/s) the car travels roughly 0.9 meters between the two shots, so the mismatch could cost us an error of almost a meter!
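The relationship behind this estimate is simply distance = speed × time offset. Here is a small sketch for trying your own numbers; the function name and the list of offsets are illustrative assumptions, not measurements from a real system.

```python
def sync_position_error_m(speed_kmh, time_offset_s):
    """Distance (in meters) travelled between two unsynchronized shots."""
    speed_ms = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_ms * time_offset_s


# How the error grows with the shutter mismatch at 100 km/h.
for offset_s in (1 / 1000, 1 / 100, 1 / 30):
    error_m = sync_position_error_m(100, offset_s)
    print(f"offset {offset_s * 1000:.1f} ms -> error {error_m:.2f} m")
```

The error scales linearly with both speed and offset, so shrinking the mismatch from tens of milliseconds to well under a millisecond shrinks the position error by the same factor.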

Luckily, there are hardware-backed solutions for time synchronization that help Hypnotoad’s eyes blink in sync.

For calibrating multiple cameras, as for calibrating a single camera, it is convenient to use a pattern with known dimensions. By moving the pattern in the shared field of view of the cameras, we can calibrate them using a triangulation algorithm.
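"Known dimensions" means that we can write down the 3D coordinates of every key point in the pattern's own coordinate frame. Here is a minimal sketch for a flat grid pattern such as a chessboard. The function name, the row-by-row ordering, and the 25 mm square size are assumptions made for illustration. Calibration routines (for example, OpenCV's `cv2.stereoCalibrate`) take a list like this as their object points, paired with the pixel positions of the same key points detected by each camera.

```python
def pattern_object_points(cols, rows, square_size_m):
    """3D coordinates of a flat grid of key points, listed row by row.

    The pattern lies in its own Z = 0 plane; the origin is the first corner.
    """
    return [
        (c * square_size_m, r * square_size_m, 0.0)
        for r in range(rows)
        for c in range(cols)
    ]


# A pattern with 9 x 6 inner corners and 25 mm squares (assumed values).
points = pattern_object_points(9, 6, 0.025)
print(len(points), points[0], points[-1])
```

The order of these points must match the order in which the detector reports the corners in each image; otherwise the cameras would be matching different physical points.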

However, we don't always have the opportunity to cover the cameras’ shared field of view with a pattern.

Small shared FoV. There are several cameras, and their shared FoV is relatively small. This may be the case in a supermarket where there are several cameras in an aisle, but all of them have only a narrow common field of view. This causes a problem if the resolution of the cameras is low (in other words, any two cameras share only a tiny common area of pixels on the sensor). In such a case, you have to use a large pattern, which, ideally, should fill the shared field of view of the cameras; this helps to reduce the calibration error.

No pattern. Sometimes we cannot use a pattern and have to work only with what we see in the frame. It may be tempting to use, for example, floor tiles as a pattern. After all, they have nice visible borders and a fixed size. However, for calibration it is important to know that both cameras see the same point on the pattern, and with tiles we can easily confuse two adjacent corners. For a standard calibration pattern, we know the exact number of key points, so its repetitiveness actually helps.

If the scene is repetitive or indistinct (for example, a blank wall with no texture at all), it’s way harder to calibrate the cameras.

To solve this, some cameras use active stereo technology. It augments the basic stereo pair with an IR projector. The projector emits an IR pattern that is invisible to the naked eye and improves the accuracy and precision of depth estimation in scenes with poor texture. Read about it on the blog!

We at OpenCV.ai are professionally engaged in the development of computer vision and artificial intelligence systems. Come to us to discuss your project and your requirements for multi-camera calibration.