In recent years, the field of 3D computer vision has undergone significant changes, especially in image rendering. An important task in this field is Novel View Synthesis, which aims to generate an image of a scene from a novel viewpoint using a sparse set of images of that scene.

One notable breakthrough in this area is the NeRF model (Neural Radiance Fields), which uses a neural network and volume rendering techniques to generate novel views of the scene. The input for NeRF is a set of images with corresponding camera poses (extrinsic matrices). The model itself is essentially a representation of a given 3D scene: a continuous field of points, each with a predicted density and color.

The NeRF model consists of two parts: volume rendering and a neural network. At its core, the volume rendering algorithm uses a probabilistic representation of the light passing through a scene to produce the color at a given image pixel. In turn, the neural network learns a mapping from 3D point coordinates and viewing direction to color and density. Now, let's look at NeRF's components in more detail.

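In its continuous form, volume rendering computes the color of a pixel as an integral along the camera ray. NeRF approximates this integral with a discrete sum, which is much cheaper to evaluate. For every pixel, we cast a ray from the camera center through that pixel on the image plane and sample a set of points $t_1, \dots, t_N$ on this ray. In the original paper, the ray is split into evenly spaced bins and one point is drawn at random from each bin (stratified sampling), so neighboring samples bound segments $(t_i, t_{i+1})$ of length $\delta_i = t_{i+1} - t_i$. To compute the color of the pixel, we must estimate two quantities for each sample. The first is the transmittance $T_i$ along the ray before point $t_i$, which can be interpreted as the fraction of light that reaches $t_i$ without being blocked. The second is the opacity $\alpha_i$ of the ray segment $(t_i, t_{i+1})$, that is, the light contribution of that segment. The product $T_i \alpha_i$ acts as the weight of the color at $t_i$, and summing the weighted colors along the ray is known as alpha compositing. We estimate the color as a weighted sum of colors at each point:

$$\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \, \alpha_i \, \mathbf{c}_i, \qquad \alpha_i = 1 - \exp(-\sigma_i \delta_i), \qquad T_i = \exp\Big(-\sum_{j=1}^{i-1} \sigma_j \delta_j\Big)$$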

Density determines how much light is absorbed at each point. Higher density means a higher probability of the ray hitting the object's surface at that point. Our task is to find the missing parameters, the color $\mathbf{c}_i$ and the density $\sigma_i$ at each point, so that we can calculate the weights of the sum. This is where the neural network comes into play.
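The weighted sum described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the reference implementation: the function name `render_ray`, its arguments, and the choice to treat the last segment as very long (`1e10`) are assumptions made for this example.

```python
import math

def render_ray(sigmas, colors, t_vals):
    """Composite per-sample densities and RGB colors into one pixel color.

    sigmas: non-negative densities at the samples
    colors: (r, g, b) tuples at the samples
    t_vals: increasing sample distances along the ray
    """
    n = len(sigmas)
    pixel = [0.0, 0.0, 0.0]
    weights = []
    transmittance = 1.0  # nothing has blocked the ray before the first sample
    for i in range(n):
        # Segment length; the last segment is assumed to be very long.
        delta = t_vals[i + 1] - t_vals[i] if i + 1 < n else 1e10
        alpha = 1.0 - math.exp(-sigmas[i] * delta)  # opacity of this segment
        weight = transmittance * alpha
        weights.append(weight)
        for k in range(3):
            pixel[k] += weight * colors[i][k]
        transmittance *= 1.0 - alpha  # fraction of light surviving this segment
    return pixel, weights
```

For a ray that crosses empty space and then hits a dense red region, for example `render_ray([0.0, 0.0, 50.0, 50.0], [(0, 0, 1), (0, 0, 1), (1, 0, 0), (1, 0, 0)], [2.0, 2.5, 3.0, 3.5])`, almost all of the weight lands on the first dense sample and the pixel comes out red.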

As mentioned earlier, a neural network is used to map coordinates and camera viewing directions to density and color for each point. The architecture is relatively simple: a stack of fully connected layers, that is, a multilayer perceptron (MLP). The NeRF model comprises two sub-networks: one for density prediction and another for color prediction.

The input of the network is composed of coordinates and the camera viewing direction, which are passed through a positional encoding layer to create a higher-dimensional input. The positional encoding generates high-frequency features, which help the neural network retain small details of the scene.
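As a rough sketch, the positional encoding from the NeRF paper maps each input coordinate to sines and cosines at exponentially growing frequencies. The function name `positional_encoding` and the parameter `num_freqs` are names chosen for this example; the paper uses 10 frequencies for positions and 4 for viewing directions.

```python
import math

def positional_encoding(x, num_freqs):
    """Encode each coordinate p as sin(2^k * pi * p), cos(2^k * pi * p) for k < num_freqs."""
    features = []
    for p in x:
        for k in range(num_freqs):
            freq = (2.0 ** k) * math.pi
            features.append(math.sin(freq * p))
            features.append(math.cos(freq * p))
    return features
```

With `num_freqs=10`, a 3D position becomes a 60-dimensional vector (3 coordinates, 10 frequencies, 2 functions each).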

In each forward pass, the encoded 3D coordinates are first passed through the density network, which generates density predictions and embedding vectors. These embeddings, along with the encoded viewing direction, are then fed into the color network, which produces color predictions.
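The data flow between the two sub-networks can be sketched as follows. This is a deliberately tiny, untrained model in plain Python: the class name `TinyNeRF`, the layer sizes, and the random initialization are assumptions for illustration and are much smaller than the networks used in practice. The ReLU on density and the sigmoid on color follow the original paper.

```python
import math
import random

def linear(x, weights, bias):
    # One fully connected layer: weights is a list of rows, one per output unit.
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def init_layer(rng, n_in, n_out):
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

class TinyNeRF:
    def __init__(self, pos_dim, dir_dim, hidden=16, seed=0):
        rng = random.Random(seed)
        # Density network: encoded position -> (sigma, embedding)
        self.density_hidden = init_layer(rng, pos_dim, hidden)
        self.density_out = init_layer(rng, hidden, 1 + hidden)
        # Color network: (embedding, encoded direction) -> RGB
        self.color_hidden = init_layer(rng, hidden + dir_dim, hidden)
        self.color_out = init_layer(rng, hidden, 3)

    def forward(self, encoded_pos, encoded_dir):
        h = relu(linear(encoded_pos, *self.density_hidden))
        out = linear(h, *self.density_out)
        sigma = max(0.0, out[0])  # ReLU keeps density non-negative
        embedding = out[1:]
        h = relu(linear(embedding + list(encoded_dir), *self.color_hidden))
        # Sigmoid maps each color channel into (0, 1)
        rgb = [1.0 / (1.0 + math.exp(-v)) for v in linear(h, *self.color_out)]
        return sigma, rgb
```

Because the viewing direction only enters the color network, the predicted density at a point is the same from every viewpoint, while the color may change with the viewing direction.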

To optimize the weights of these neural networks, we use a single color loss function. This loss measures the difference between the RGB color values of a rendered pixel and the color values of the corresponding pixel in the target image. Specifically, the rendering loss is the squared error between the rendered color and the ground-truth color, accumulated over the pixels (rays) in a training batch.
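A minimal version of this loss, assuming a batch of rendered and ground-truth RGB pixels given as tuples, could look like the following. The name `rendering_loss` and the choice to average over the batch (rather than sum) are assumptions for this sketch.

```python
def rendering_loss(rendered, target):
    """Squared RGB error per pixel, averaged over the batch of pixels."""
    total = 0.0
    for pred, true in zip(rendered, target):
        total += sum((p - t) ** 2 for p, t in zip(pred, true))
    return total / len(rendered)
```

The loss is zero only when every rendered pixel matches its target exactly.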

The training objective pushes the density to be high at points where the ray intersects the object's surface, while also requiring the color there to be predicted accurately. It's important to note that the density branch receives no direct supervision during training, as information about the scene's density or depth is not available. Instead, density is trained indirectly: the color loss is backpropagated through the rendering weights, which depend on density.
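To see that density does receive a training signal from the color loss, one can perturb each density value and watch the loss change. The sketch below does this with finite differences on a single color channel. The names `render_red` and `loss_gradient_wrt_density`, the fixed segment length `delta=0.5`, and the restriction to the red channel are simplifications assumed for illustration; a real implementation would rely on automatic differentiation.

```python
import math

def render_red(sigmas, reds, delta=0.5):
    """Red channel of a pixel, composited from per-sample densities and red values."""
    pixel, transmittance = 0.0, 1.0
    for sigma, red in zip(sigmas, reds):
        alpha = 1.0 - math.exp(-sigma * delta)
        pixel += transmittance * alpha * red
        transmittance *= 1.0 - alpha
    return pixel

def loss_gradient_wrt_density(sigmas, reds, target, eps=1e-5):
    """Central finite-difference gradient of the squared color error w.r.t. each density."""
    grads = []
    for i in range(len(sigmas)):
        up = list(sigmas)
        up[i] += eps
        down = list(sigmas)
        down[i] -= eps
        loss_up = (render_red(up, reds) - target) ** 2
        loss_down = (render_red(down, reds) - target) ** 2
        grads.append((loss_up - loss_down) / (2 * eps))
    return grads
```

For `loss_gradient_wrt_density([1.0, 1.0], [0.0, 1.0], target=1.0)`, the gradient is positive for the first (black) sample and negative for the second (red) one, so gradient descent would lower the density of the sample that hides the target color and raise the density of the sample that matches it.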

If the density MLP is not trained correctly, the scene will be rendered with errors, which may show up as artifacts such as fog or an incorrect surface appearance. This is because density is a critical parameter that determines how light interacts with the scene: incorrect density values cause the wrong amount of light to be absorbed along the ray.

It's crucial to emphasize that the original NeRF and many other NeRF-based approaches are typically trained for a specific scene and lack the ability to generalize to other scenes. Nevertheless, some methods, such as MVSNeRF, address this limitation by incorporating additional convolutional neural networks.

Training a NeRF model can be quite lengthy, often taking dozens of hours for a single scene. However, thanks to ongoing research, there are now methods such as Instant-NGP that can train NeRF models in just a few minutes.

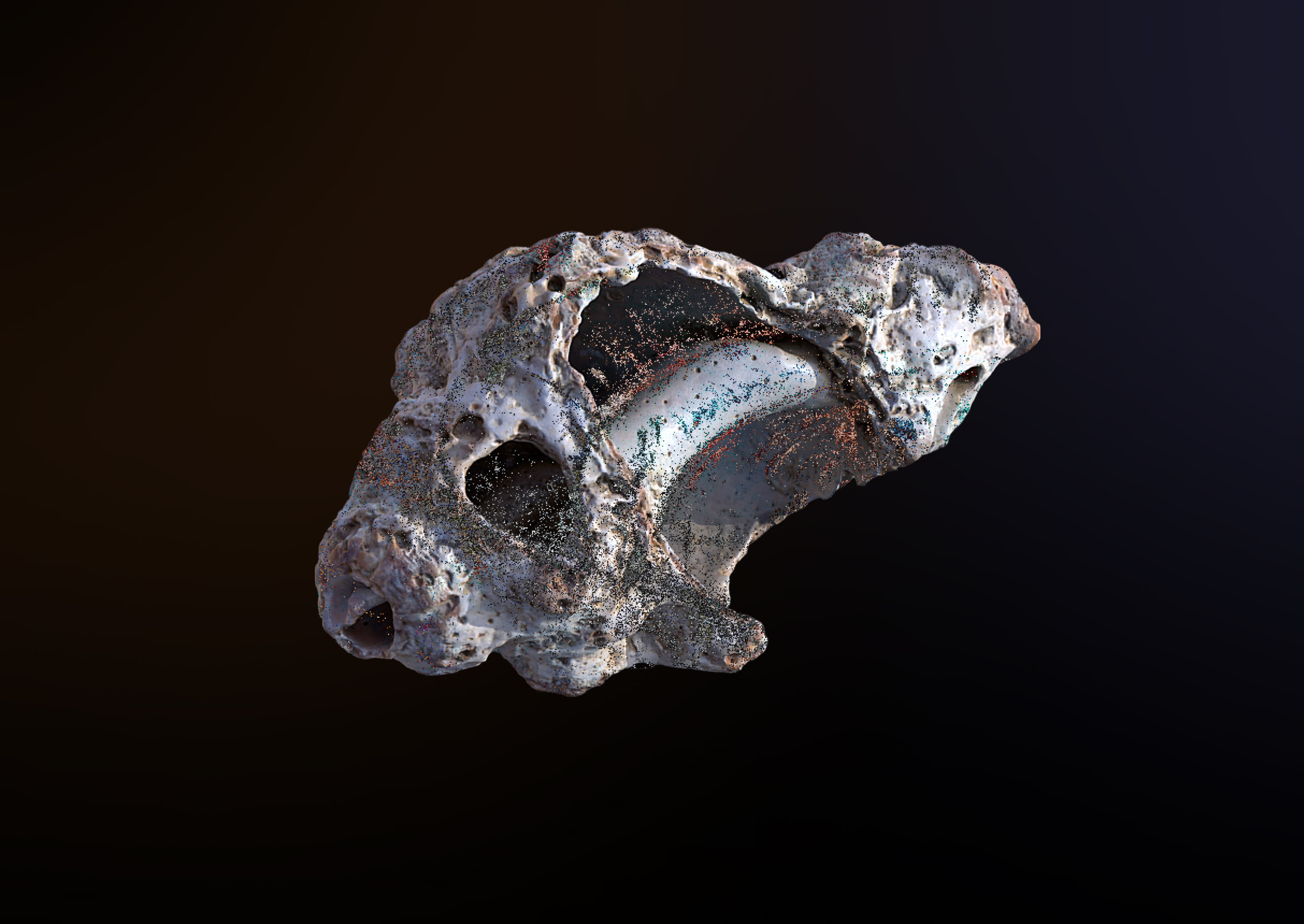

NeRF represents the 3D scene as a continuous function of 3D position and viewing direction, rather than as a mesh or point cloud as in traditional methods. It treats the scene as a composition of infinitesimally small volumes, each assigned its own density and radiance (color) value. These volumes collectively form what is known as a "neural radiance field."

During the training phase, NeRF adapts to a single scene and stores its representation in the weights of an MLP. By evaluating the neural network at different 3D coordinates and viewing directions, NeRF can estimate the radiance or color value for each volume element in the scene from various perspectives.

The concept of Neural Radiance Fields has great potential in various fields of work, such as VR and AR, game development, 3D graphics, and 3D reconstruction. However, like any other method, NeRF has its strengths and weaknesses.

Many limitations of the original NeRF model have been addressed by researchers, leading to modern NeRF-based solutions that train or render faster (Instant-NGP, NSVF, KiloNeRF), operate without known camera parameters (NeRF--), or generalize to multiple scenes (MVSNeRF). For further information about these improved NeRF models, please refer to the provided links.