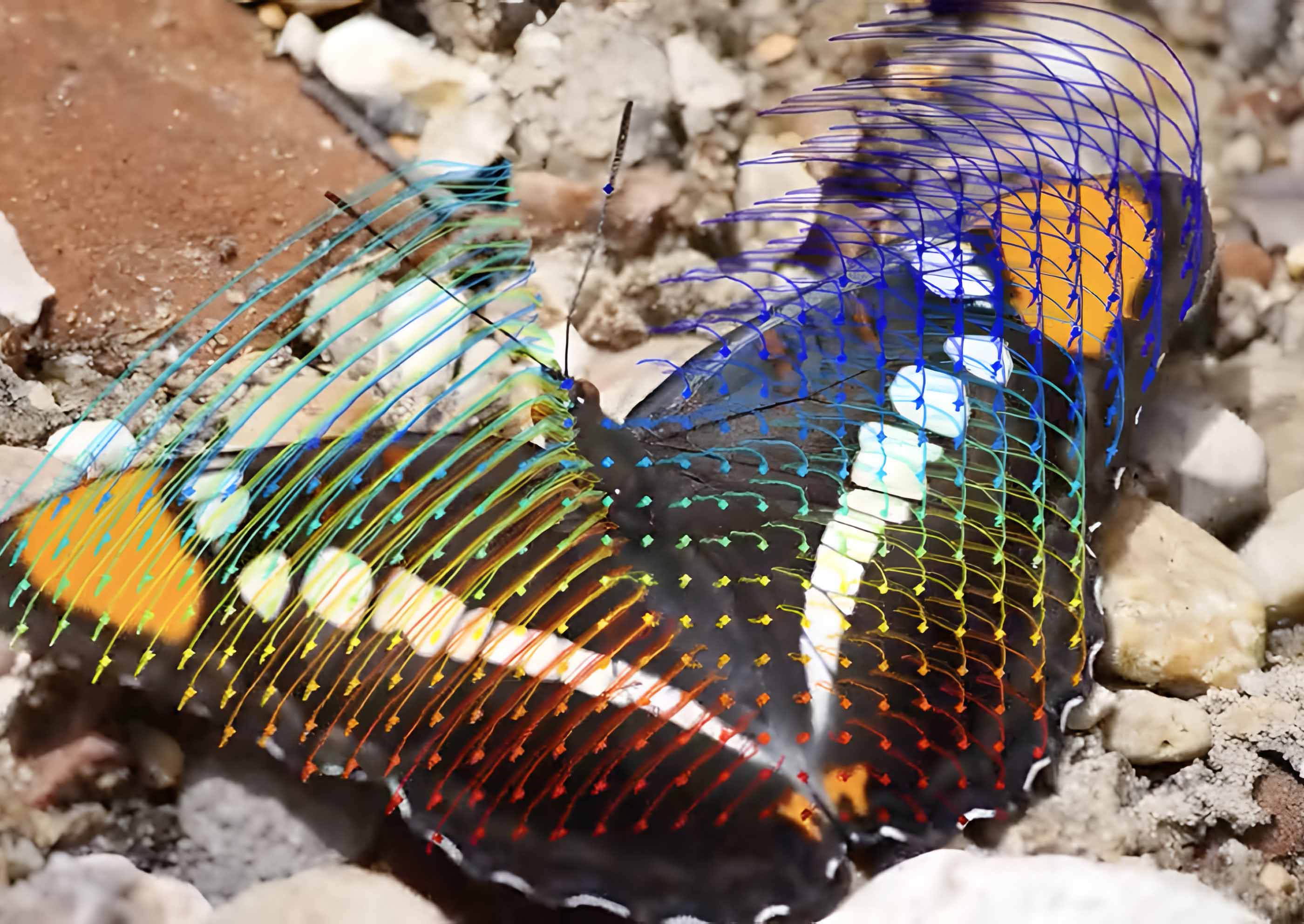

Last week, researchers from Cornell University, Google Research, and UC Berkeley published a paper with the intriguing title "Tracking Everything Everywhere All at Once". It proposes a solution to the Tracking Any Point (TAP) problem. As the name hints, it tracks everything everywhere: every pixel across all frames, even when the point is occluded. See the beautiful visualizations from the paper’s OmniMotion website:

So, if you select a specific pixel, you can find its coordinates in all previous frames as well as in all subsequent frames. That is fantastic! However, since no code is available yet, we can only guess, and our guess is that the pixel should still be a good feature to track, e.g. a corner, rather than a point in a single-color or low-texture area.

The first thing to note is that the algorithm works on the whole video all at once. It runs an optimization over all video frames, so it is not designed for real-time tracking. However, it might be useful for offline tasks such as analyzing surveillance videos or sports analytics.

The second thing: the track optimization needs supervision from an external algorithm. The authors compute RAFT optical flow for all frame pairs before the optimization starts.
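To get a feel for what "all frame pairs" implies, here is a minimal sketch of the precomputation loop. The `estimate_flow` argument is a hypothetical placeholder for whatever flow model you plug in (RAFT in the paper); it is not an API from the authors' code, which was not available at the time of writing.

```python
from itertools import permutations

def pairwise_flows(frames, estimate_flow):
    """Run a flow estimator on every ordered pair of distinct frames.

    `estimate_flow(src, dst)` is a hypothetical callable standing in for
    an optical flow model such as RAFT.
    """
    flows = {}
    for i, j in permutations(range(len(frames)), 2):
        flows[(i, j)] = estimate_flow(frames[i], frames[j])
    return flows

# Dummy frames and a dummy estimator, just to count the work involved.
frames = ["frame%d" % k for k in range(5)]
flows = pairwise_flows(frames, lambda src, dst: (src, dst))
num_pairs = len(flows)  # N * (N - 1) ordered pairs for N frames
```

The number of pairs grows quadratically with the number of frames, which is one more reason the method is an offline one.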

The authors propose to map every pixel from every frame into a single common 3D space (the canonical 3D volume G in the paper). Thus one point in this space corresponds to a 3D trajectory across time. They combine two techniques to build this 3D space:

- A NeRF-style network that predicts volume density and color for points in the canonical volume.

- Invertible neural networks that map points between each frame's local 3D space and the canonical volume, capturing the combined camera and scene motion.
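Why invertibility matters can be shown with a toy example. The sketch below uses two hand-written affine coupling steps (the building block of Real-NVP-style invertible networks) to map a 3D point from a frame into a shared canonical space and back out into another frame. The scale and shift functions are made-up stand-ins chosen only so that the example runs; they are not learned and they are not the authors' architecture.

```python
import math

def to_canonical(p, t):
    """Map a local 3D point at time t into the canonical space (toy bijection)."""
    x, y, z = p
    # Coupling step 1: update z conditioned on (x, y) and t.
    z = z * math.exp(0.1 * math.sin(x + t)) + 0.5 * math.cos(y - t)
    # Coupling step 2: update (x, y) conditioned on z and t.
    x = x * math.exp(0.05 * t * math.tanh(z)) + 0.2 * t
    y = y * math.exp(-0.05 * t * math.tanh(z)) + 0.1 * t * z
    return (x, y, z)

def from_canonical(u, t):
    """Exact inverse of to_canonical for the same time t."""
    x, y, z = u
    # Undo step 2 first; z was not changed by it, so it can be reused.
    x = (x - 0.2 * t) * math.exp(-0.05 * t * math.tanh(z))
    y = (y - 0.1 * t * z) * math.exp(0.05 * t * math.tanh(z))
    # Undo step 1 using the recovered (x, y).
    z = (z - 0.5 * math.cos(y - t)) * math.exp(-0.1 * math.sin(x + t))
    return (x, y, z)

def map_between_frames(p, t_src, t_dst):
    """Go from frame t_src to frame t_dst through the canonical space."""
    return from_canonical(to_canonical(p, t_src), t_dst)

# One query point in frame 0 yields a location in every other frame.
p = (0.3, -0.2, 1.5)
track = [map_between_frames(p, 0.0, float(t)) for t in range(5)]
```

Because every frame is connected to the same canonical point through a bijection, mapping from frame A to frame B and back to A returns the starting point, so the resulting tracks are consistent across the whole video by construction.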

The algorithm overview is shown in the picture below:

The optimization combines three loss terms: a flow loss, a photometric loss, and a regularization term that penalizes abrupt changes in displacement between consecutive 3D point locations, which keeps the 3D motion temporally smooth. The authors evaluate OmniMotion on the TAP-Vid benchmark and report an impressive improvement over previous methods.
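As a rough illustration of how these three terms could fit together, here is a minimal sketch in plain Python. The exact norms, the second-difference form of the smoothness term, and the weights `lambda_pho` and `lambda_reg` are assumptions made for this example, not values taken from the paper.

```python
def flow_loss(pred_flow, target_flow):
    """Mean L1 distance between predicted and reference 2D flow vectors."""
    total = sum(abs(pu - tu) + abs(pv - tv)
                for (pu, pv), (tu, tv) in zip(pred_flow, target_flow))
    return total / len(pred_flow)

def photometric_loss(pred_rgb, obs_rgb):
    """Mean squared error between predicted and observed RGB colors."""
    total = sum((p - o) ** 2
                for pc, oc in zip(pred_rgb, obs_rgb)
                for p, o in zip(pc, oc))
    return total / (3 * len(pred_rgb))

def smoothness_penalty(track):
    """Mean L1 norm of second differences along a 3D track.

    A constant-velocity track has zero penalty; sudden jumps are penalized.
    """
    if len(track) < 3:
        return 0.0
    total = 0.0
    for prev, cur, nxt in zip(track, track[1:], track[2:]):
        total += sum(abs(n - 2 * c + p) for p, c, n in zip(prev, cur, nxt))
    return total / (len(track) - 2)

def total_loss(pred_flow, target_flow, pred_rgb, obs_rgb, track,
               lambda_pho=1.0, lambda_reg=0.1):
    """Weighted sum of the three terms; the weights are illustrative only."""
    return (flow_loss(pred_flow, target_flow)
            + lambda_pho * photometric_loss(pred_rgb, obs_rgb)
            + lambda_reg * smoothness_penalty(track))
```

In this sketch the flow term would be compared against the precomputed optical flow, the photometric term against the actual pixel colors, and the smoothness term needs no external supervision at all.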

Object tracking remains one of the hard computer vision problems. This paper significantly advances the state of the art using complex but elegant algorithms. It is a great research paper, and we are looking forward to trying it live!

Paper: https://arxiv.org/pdf/2306.05422.pdf