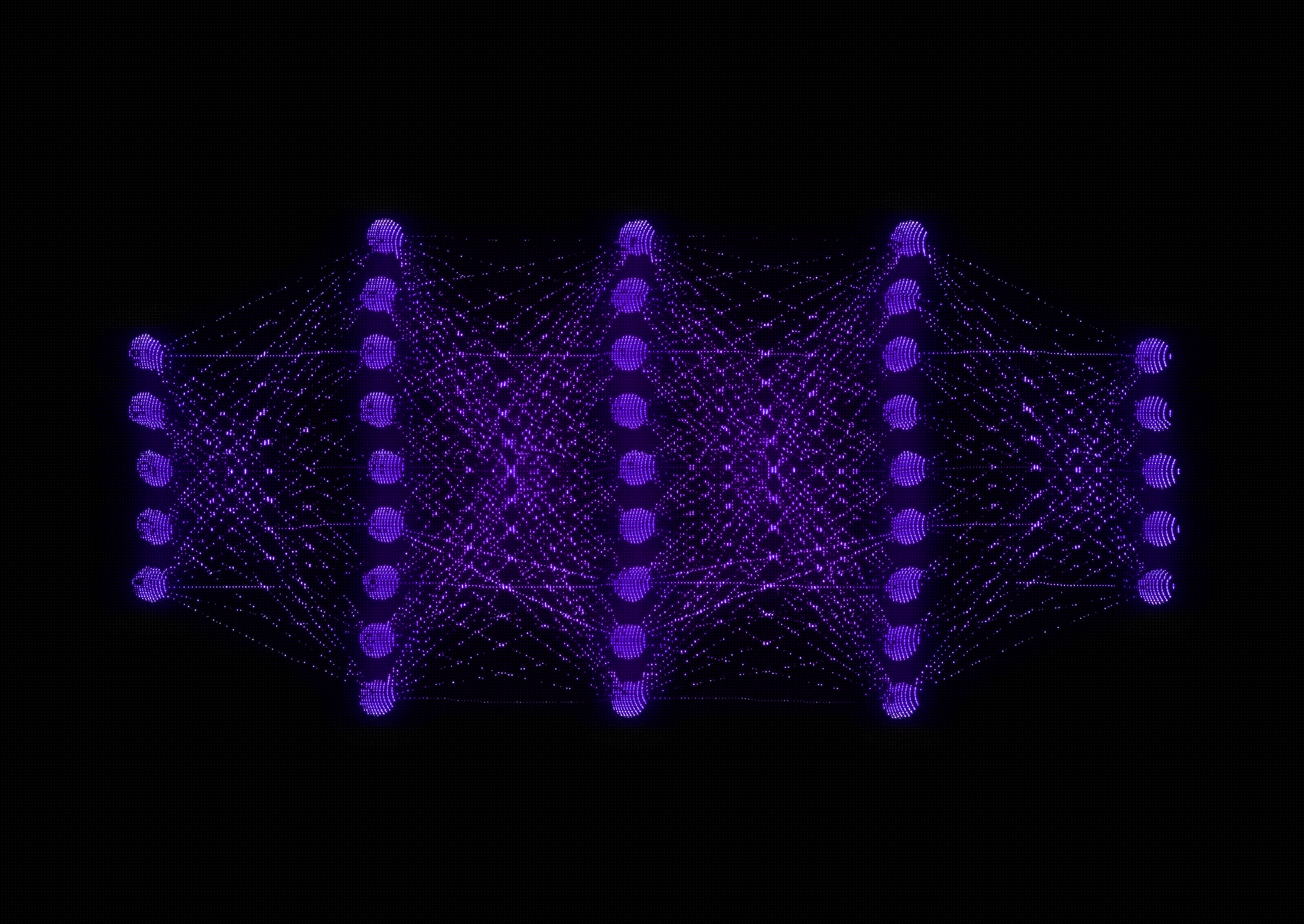

Large neural networks perform well but often need a large number of parameters to do so. Training such networks, transformers for example, requires a lot of GPU memory. Sometimes one GPU is not enough, and practitioners have to use several GPUs, buy more powerful hardware, or turn to cloud solutions. We took an in-depth look at these hardware options for computer vision projects in our article. On top of that, during training the GPU has to store the activations of every layer of the network for backpropagation, which takes up a lot of memory. But what if we could avoid storing activations altogether?

Then we could train with larger batches or more parameters, which can improve the network's accuracy and quality. The paper "The Reversible Residual Network: Backpropagation Without Storing Activations" proposes a way to do this. Let's explore reversible networks and how they save GPU memory during training.

To compute gradients during backpropagation, you need both the network's weights and the activations of its layers. That's why modern deep learning frameworks keep each layer's activations in memory during the forward pass. Reversible neural networks, however, are built so that the activations of layer i can be computed from the activations of the following, deeper layer i+1.
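To make the memory cost of stored activations concrete, here is a back-of-the-envelope sketch. It assumes a hypothetical stack of identical layers with float32 activations and ignores weights, gradients, and framework workspace; the layer sizes are illustrative and are not taken from the paper. The "reversible" case is idealized: it keeps only the final output and assumes every layer can be inverted.

```python
# Rough, illustrative estimate of activation memory kept for backpropagation.
# Assumptions (hypothetical): every layer outputs a tensor of the same shape,
# activations are float32 (4 bytes), and weights, gradients and framework
# workspace are ignored.

BYTES_PER_FLOAT32 = 4

def activation_bytes(batch, channels, height, width):
    """Memory for one layer's output activation tensor, in bytes."""
    return batch * channels * height * width * BYTES_PER_FLOAT32

def stored_activation_mib(num_layers, batch, channels, height, width, reversible=False):
    """Activation memory kept for backprop, in MiB.

    Standard training keeps one activation tensor per layer. An idealized
    reversible stack keeps only the final output and recomputes the rest.
    """
    per_layer = activation_bytes(batch, channels, height, width)
    tensors_kept = 1 if reversible else num_layers
    return tensors_kept * per_layer / 2**20

if __name__ == "__main__":
    # Hypothetical configuration: 50 layers, batch 64, 256 channels, 56x56 maps.
    for rev in (False, True):
        mib = stored_activation_mib(50, 64, 256, 56, 56, reversible=rev)
        print(f"reversible={rev}: {mib:.0f} MiB")
    # reversible=False: 9800 MiB
    # reversible=True: 196 MiB
```

The point is the scaling: standard training grows linearly with depth, while the idealized reversible stack stays at one activation tensor regardless of depth.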

Since backpropagation starts at the end of the network, a stack of reversible layers only needs to keep the activations of the last layer: the activations of every earlier layer can be recomputed on the fly, which saves memory. The NICE paper introduced a basic type of reversible layer known as an “additive coupling layer.” It splits the layer's input x into two parts, x1 and x2, and computes the layer's output as follows:

$$
y_1 = x_1, \qquad y_2 = x_2 + m(x_1)
$$

where m is any differentiable function, such as an MLP (Multi-Layer Perceptron) or a convolutional layer followed by batch normalization and a ReLU activation. Note that m itself never has to be inverted, so it can be as complex as needed. The outputs y1 and y2 are then concatenated.

Given the output activation y, recovering the original input is straightforward:

$$
x_1 = y_1, \qquad x_2 = y_2 - m(y_1)
$$
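The forward and inverse formulas of the additive coupling layer can be checked with a minimal sketch on plain Python lists. The function m below is a hypothetical stand-in for an MLP or convolutional layer; it is deliberately non-invertible (the ReLU clips negative values) to show that the layer stays invertible anyway.

```python
# Minimal sketch of a NICE-style additive coupling layer on plain Python lists.
# m is a hypothetical stand-in for an MLP or conv layer; invertibility does not
# depend on m, because the inverse only evaluates m and never inverts it.

def m(x1):
    """Arbitrary (non-invertible) function of the first half: affine map + ReLU."""
    return [max(0.0, 0.5 * v + 1.0) for v in x1]

def split(x):
    assert len(x) % 2 == 0, "this sketch assumes an even-length input"
    half = len(x) // 2
    return x[:half], x[half:]

def coupling_forward(x):
    x1, x2 = split(x)
    y1 = x1                                     # y1 = x1
    y2 = [a + b for a, b in zip(x2, m(x1))]     # y2 = x2 + m(x1)
    return y1 + y2                              # concatenate [y1, y2]

def coupling_inverse(y):
    y1, y2 = split(y)
    x1 = y1                                     # x1 = y1
    x2 = [a - b for a, b in zip(y2, m(y1))]     # x2 = y2 - m(y1)
    return x1 + x2                              # concatenate [x1, x2]

x = [1.0, -2.0, 3.0, 0.5]
y = coupling_forward(x)
print(y)                      # [1.0, -2.0, 4.5, 0.5]
print(coupling_inverse(y))    # [1.0, -2.0, 3.0, 0.5]
```

Because y1 = x1 is passed through unchanged, the inverse can evaluate m(y1) = m(x1) and subtract it, which is all that is needed to recover x2.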

The authors apply this technique to the ResNet (Residual Neural Network) architecture. A standard residual block computes y = x + F(x); the reversible version splits the input into two halves and couples them through two residual functions, F and G.

The output of a reversible residual block is computed as follows:

$$
y_1 = x_1 + F(x_2), \qquad y_2 = x_2 + G(y_1)
$$

From these outputs, the block's original inputs can be recovered (x2 first, then x1):

$$
x_2 = y_2 - G(y_1), \qquad x_1 = y_1 - F(x_2)
$$

where F and G play the same role as the residual function in a ResNet, and the input x is split into x1 and x2 along the channel dimension. Notably, F and G in a RevNet (Reversible Residual Network) operate on tensors with fewer channels than F in a standard ResNet, since each one sees only one of the two channel groups.
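The reversible block and the "store only the last output" idea can be sketched together in plain Python. The residual functions here are hypothetical simplifications (an elementwise tanh with a single weight) standing in for the conv/batch-norm/ReLU stacks used in the paper; x1 and x2 play the role of the two channel groups.

```python
import math

# Minimal sketch of a RevNet-style reversible residual block on Python lists.
# F and G are hypothetical stand-ins for the residual functions: each is an
# elementwise tanh with one weight, just to keep the example self-contained.

def make_residual_fn(weight):
    return lambda v: [math.tanh(weight * a) for a in v]

def add(a, b):
    return [p + q for p, q in zip(a, b)]

def sub(a, b):
    return [p - q for p, q in zip(a, b)]

def block_forward(x1, x2, F, G):
    y1 = add(x1, F(x2))      # y1 = x1 + F(x2)
    y2 = add(x2, G(y1))      # y2 = x2 + G(y1)
    return y1, y2

def block_inverse(y1, y2, F, G):
    x2 = sub(y2, G(y1))      # x2 = y2 - G(y1)
    x1 = sub(y1, F(x2))      # x1 = y1 - F(x2)
    return x1, x2

# Hypothetical stack of three blocks, each with its own (F, G) pair.
blocks = [(make_residual_fn(w), make_residual_fn(-w)) for w in (0.5, 1.0, 1.5)]

def stack_forward(x1, x2):
    """Run all blocks, keeping only the final output (no per-block activations)."""
    for F, G in blocks:
        x1, x2 = block_forward(x1, x2, F, G)
    return x1, x2

def stack_reconstruct_inputs(y1, y2):
    """Walk the stack backwards, rebuilding every block's input from its output."""
    inputs = []
    for F, G in reversed(blocks):
        y1, y2 = block_inverse(y1, y2, F, G)
        inputs.append((y1, y2))
    return inputs[::-1]      # inputs[i] is the input of block i

x1, x2 = [0.2, -1.0], [0.7, 0.3]     # the two channel groups of the input
y1, y2 = stack_forward(x1, x2)
print(stack_reconstruct_inputs(y1, y2)[0])   # approximately ([0.2, -1.0], [0.7, 0.3])
```

The reconstruction matches the original inputs up to floating-point rounding, which is exactly the numerical caveat discussed below.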

While the idea of making network layers reversible is compelling in its simplicity, there are a few crucial points to consider:

1. Not all layers can be made reversible. In particular, layers with a stride greater than 1 are not reversible because they discard information about the input. These layers are left unchanged and store their activations as usual during the forward pass.

2. For reversible layers, activations must be explicitly freed from memory to actually save memory during backpropagation, because deep learning frameworks keep them by default. The backward pass also has to be customized; example implementations are available for TensorFlow and PyTorch.

3. Training with reversible layers requires more computation, because the input activations have to be recomputed during the backward pass. The authors note a computational overhead of roughly 50%.

4. The recomputed input activations may differ slightly from those produced during the forward pass because of floating-point rounding errors, which are common with float32. This discrepancy might affect the performance of some neural networks.
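Points 2 and 3 can be illustrated with a scalar sketch of a custom backward pass: each block first recomputes its inputs from its outputs (the extra work behind the overhead) and then backpropagates through them, so no per-block activations are kept. Everything here is a hypothetical simplification, not the paper's code: scalars instead of tensors, residual functions F(x) = tanh(a·x) and G(x) = tanh(b·x), and a made-up loss.

```python
import math

# Scalar sketch of backprop through reversible blocks without stored activations.
# Hypothetical simplifications: F(x) = tanh(a*x), G(x) = tanh(b*x), and the
# loss below; real RevNet blocks use tensors and conv/BN/ReLU residual functions.

def F(x, a):
    return math.tanh(a * x)

def G(x, b):
    return math.tanh(b * x)

def block_forward(x1, x2, a, b):
    y1 = x1 + F(x2, a)
    y2 = x2 + G(y1, b)
    return y1, y2

def block_backward(y1, y2, g1, g2, a, b):
    """Given outputs (y1, y2) and gradients (dL/dy1, dL/dy2), rebuild the inputs
    and return (x1, x2, dL/dx1, dL/dx2, dL/da, dL/db)."""
    tg = G(y1, b)
    x2 = y2 - tg                          # recompute x2 = y2 - G(y1)
    tf = F(x2, a)
    x1 = y1 - tf                          # recompute x1 = y1 - F(x2)
    # d tanh(w*x)/dx = w*(1 - t^2) and d tanh(w*x)/dw = x*(1 - t^2)
    gz1 = g1 + b * (1 - tg * tg) * g2     # total gradient reaching y1
    gx2 = g2 + a * (1 - tf * tf) * gz1
    gx1 = gz1
    ga = x2 * (1 - tf * tf) * gz1
    gb = y1 * (1 - tg * tg) * g2
    return x1, x2, gx1, gx2, ga, gb

def loss(y1, y2):
    return y1 ** 2 + 3.0 * y2             # hypothetical loss

def reversible_grads(x1, x2, params):
    """Gradients of loss() w.r.t. the inputs and every (a, b) pair."""
    y1, y2 = x1, x2
    for a, b in params:                   # forward: keep only the final output
        y1, y2 = block_forward(y1, y2, a, b)
    g1, g2 = 2.0 * y1, 3.0                # dL/dy1 and dL/dy2 for loss() above
    param_grads = []
    for a, b in reversed(params):         # backward: rebuild inputs block by block
        y1, y2, g1, g2, ga, gb = block_backward(y1, y2, g1, g2, a, b)
        param_grads.append((ga, gb))
    return param_grads[::-1], (g1, g2)

grads, input_grads = reversible_grads(0.4, -0.6, [(0.5, -0.8), (1.2, 0.3)])
print(grads, input_grads)
```

Python floats are float64, so the recomputed inputs here are accurate to roughly machine precision; with float32 the same recomputation accumulates larger rounding errors, which is the concern raised in point 4.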

Turning to the results, the researchers compared the classification accuracy of RevNet and ResNet on the CIFAR-10, CIFAR-100, and ImageNet datasets. To match RevNet to ResNet in the number of learnable parameters, the authors reduced the number of residual blocks before downsampling and increased the number of channels within those blocks. Below is the comparative table:

And the accuracy results:

The Reversible Residual Network achieves nearly the same accuracy as the Residual Network on these datasets while significantly improving memory efficiency. Although the exact memory savings in megabytes weren't quantified, a subsequent article will explore Dr2Net, a newer reversible network model that reduces memory requirements even further.

Reversible residual networks not only improve memory efficiency but also contribute to the broader field of computer vision, a key technology behind many of the features we use daily on our smartphones. For an in-depth look at how computer vision powers applications on your smartphone, from security to photography, check out our article Computer Vision Applications in Your Smartphone.

Reversible residual networks offer a promising approach to making neural network training more memory-efficient, showcasing the continuous evolution of AI technology towards more sustainable and accessible solutions. Explore the possibilities AI integration can bring to your projects across multiple sectors with OpenCV.ai's expertise in computer vision services. Our team is committed to driving innovation with AI Services to revolutionize practices in diverse industries.